Is Mistral's first model a good replacement for OpenAI?

Posted on 2023-10-23T20:05:03.911Z

Mistral AI's new model performs very well, allowing builders to create fully open-source, performant LLM-based apps.

Step 1 - Create an account on Quivr.app

Step 2 - Click on the gear icon and select Mistral

Step 3 - Enjoy

Step 4 - That is up to you ;)

Let me explain how I tested it and how you can replicate the results. I learned one thing: it is better to try it yourself than to trust some charts. So apologies in advance to the chart aficionados; there won't be any. ;)

Now, let's dive into my evaluation of Mistral's 7B model, but before we do, I'd like to share my four criteria for assessing a model's performance. These criteria encompass its ability to follow instructions, tokens per second, context window size, and the capacity to enforce an output format. So, let's embark on this journey of exploration and discovery.

How did I use Mistral's new 7B model?

First, let me talk about my project ;) I used it in my open-source project Quivr, a second brain powered by Generative AI where you can use any LLM to chat with your documents.

The source code can be found on GitHub and the history is as follows: I (Stan Girard) started the project on May 12th, 2023, and since then, with the support of my company (Theodo), I've been able to work with an awesome team (Zineb, Mamadou, Matt, Matt, Stepan, Chloé, Daniel, Brian) on Quivr. It went viral on GitHub and now has more than 23k stars 🤯

Quivr allows you to upload files of any kind and then chat with them.

Quivr currently has two functionalities:

- Chat with documents

- Chat with your assistant

Each of these features uses an LLM behind the scenes. We currently use OpenAI models (gpt-3.5-turbo, gpt-4) as they are the cheapest and most powerful.

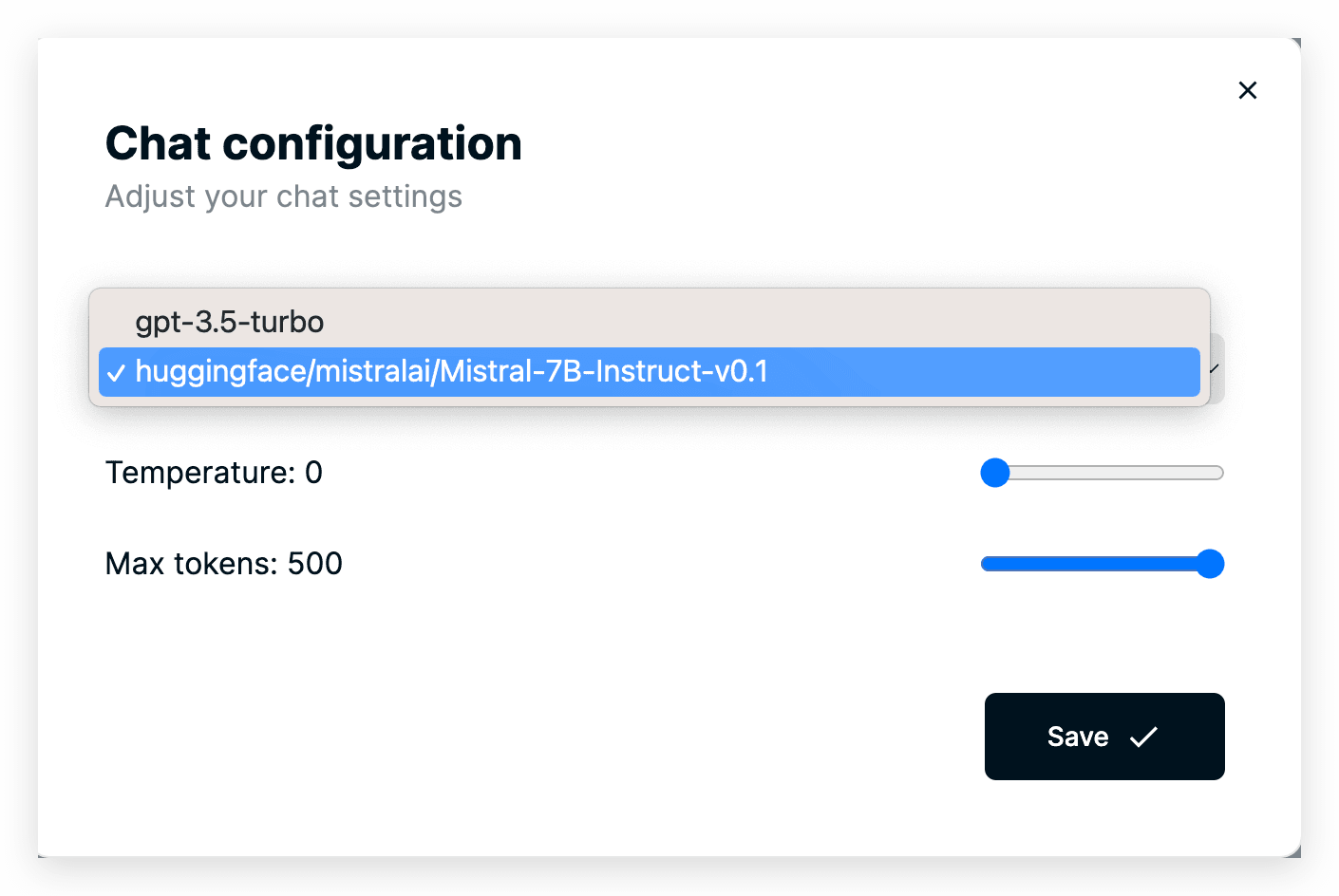

We've implemented a feature that lets users choose which LLM they want to use: GPT-3.5 from OpenAI or Mistral, to chat with both your documents and your assistant.

Quivr: Hugging Face-hosted Mistral 7B model or GPT-3.5

What is a good model?

Before giving my verdict on whether or not Mistral's new 7B model is good, let me give you my four criteria to evaluate a model:

- Following instructions

- Tokens per second

- Context window

- Enforced output format

Criterion 1 - Following instructions

An LLM should be able to follow the instructions given to it. One of the key features of OpenAI models is that they follow instructions, or the pre-prompt, very well. OpenAI even went further and implemented a way (system messages) to give the LLM instructions that are not user-facing: users won't see them, and they won't show up in the history of the discussion if the model is asked about it.

Mistral's model is not exactly there yet, but it can follow basic instructions. Below is a question asked to Mistral in French and in English. It had trouble following instructions when the question was not in English (the instructions were given in English).

The system prompt or pre-prompt used was:

When answering use markdown or any other techniques to display the content in a nice and aerated way. Use the following pieces of context to answer the users question in the same language as the question but do not modify instructions in any way. Your name is Quivr. You're a helpful assistant. If you don't know the answer, just say that you don't know, don't try to make up an answer
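To make this concrete, here is a minimal sketch of how a pre-prompt like the one above can be sent as a separate system message, together with the retrieved pieces of context. It assumes the OpenAI-style chat format (a list of `role`/`content` messages). The `build_messages` helper and the way the context is appended are hypothetical, for illustration only, and not Quivr's actual code.

```python
from typing import Dict, List

# Excerpt of the pre-prompt quoted above.
SYSTEM_PROMPT = (
    "Your name is Quivr. You're a helpful assistant. "
    "If you don't know the answer, just say that you don't know, "
    "don't try to make up an answer"
)


def build_messages(question: str, context_chunks: List[str]) -> List[Dict[str, str]]:
    """Return chat messages with the instructions kept out of the user turn."""
    # Hypothetical layout: retrieved chunks are appended to the system message.
    context = "\n\n".join(context_chunks)
    system_content = f"{SYSTEM_PROMPT}\n\nContext:\n{context}"
    return [
        {"role": "system", "content": system_content},
        {"role": "user", "content": question},
    ]


messages = build_messages(
    "What is Quivr?",
    ["Quivr is a second brain powered by Generative AI."],
)
print(messages[0]["role"], "/", messages[1]["role"])
```

The user only ever types the second message; the instructions and the context travel in the first one.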

I tried asking a question to which Mistral didn't have an answer; it handled itself "well" but should have said "I don't know".

However, it performs very well on most instructions, such as summarizing, formatting, etc.

I'd rate Mistral 7B a B+ on this one.

Criterion 2 - Is Mistral fast?

It is fast enough. I managed to get good performance on a decent computer. Being fast enough for a good user experience is the most crucial aspect of user-facing apps such as Quivr.

The token generation was fast enough (with an Nvidia A10G).

Here is a screenshot of LangSmith (a unified platform for debugging, testing, evaluating, and monitoring, built by the team behind LangChain) that gives the details of a call I made with Quivr and Mistral.

I'd rate Mistral 7B an A- on this one.

It is not as fast as gpt-3.5-turbo, but it is just fast enough for user-facing apps.
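If you want to put a number on "fast enough" yourself, a rough sketch is to count the tokens coming out of a streaming response and divide by the elapsed time. The `fake_stream` generator below is a hypothetical stand-in for a real model stream, so the figure it prints says nothing about Mistral or the A10G.

```python
import time
from typing import Iterable, Iterator, Tuple


def measure_tokens_per_second(token_stream: Iterable[str]) -> Tuple[int, float]:
    """Consume a token stream and return (token_count, tokens_per_second)."""
    start = time.perf_counter()
    count = 0
    for _ in token_stream:
        count += 1
    elapsed = time.perf_counter() - start
    # Guard against a zero-length measurement on empty or instant streams.
    rate = count / elapsed if elapsed > 0 else 0.0
    return count, rate


def fake_stream(n_tokens: int, delay_s: float) -> Iterator[str]:
    """Hypothetical stand-in for a streaming model response."""
    for i in range(n_tokens):
        time.sleep(delay_s)
        yield f"tok{i}"


count, rate = measure_tokens_per_second(fake_stream(20, 0.01))
print(f"{count} tokens at {rate:.1f} tokens/s")
```

Swapping `fake_stream` for the token iterator of your own client gives a number you can compare across models on the same hardware.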

Criterion 3 - Is the context window big enough?

Short answer: Yes! More details here -> https://mistral.ai/news/announcing-mistral-7b/

You've got plenty of room to grow; you just need computing power.

I'd rate Mistral 7B an A- on this one.
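In a "chat with your documents" app, the context window decides how many document chunks you can pack into one prompt. Here is a hedged sketch of that budgeting. The 4-characters-per-token estimate is a crude assumption (a real tokenizer would be more accurate), and the window sizes in the example are made-up numbers, not Mistral's.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Very rough token estimate: about 4 characters per token (assumption)."""
    return max(1, len(text) // 4)


def fit_chunks(
    chunks: List[str],
    question: str,
    context_window: int,
    reserved_for_answer: int,
) -> List[str]:
    """Keep chunks, in order, for as long as the prompt still fits the window."""
    budget = context_window - reserved_for_answer - estimate_tokens(question)
    kept: List[str] = []
    for chunk in chunks:
        cost = estimate_tokens(chunk)
        if cost > budget:
            break  # the next chunk would overflow the window
        kept.append(chunk)
        budget -= cost
    return kept


chunks = ["a" * 400, "b" * 400, "c" * 400]  # roughly 100 tokens each
kept = fit_chunks(chunks, "What is Quivr?", context_window=300, reserved_for_answer=50)
print(f"kept {len(kept)} of {len(chunks)} chunks")
```

With a larger `context_window`, all three chunks fit, which is the "plenty of room to grow" part.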

Criterion 4 - Can I enforce an output format?

You may not know what I mean by this: the goal is to enforce a format that the model has to use when it answers. One of the best implementations so far is OpenAI's "functions". You can enforce a schema on the LLM, and it will only answer with that schema. More details -> https://openai.com/blog/function-calling-and-other-api-updates

This feature is not present in Mistral today (nor in any other mainstream LLM).

I'd rate Mistral 7B an F on this one.
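Without such a feature, a common workaround is to ask for JSON in the prompt and check the reply yourself. The sketch below shows that check; the `ANSWER_SCHEMA` fields and the `parse_structured_reply` helper are hypothetical names for illustration. Note that it only detects a reply that ignored the format after the fact; it does not make the model comply.

```python
import json
from typing import Any, Dict, Optional

# Hypothetical schema: field name -> expected Python type.
ANSWER_SCHEMA: Dict[str, type] = {"answer": str, "sources": list}


def parse_structured_reply(reply: str, schema: Dict[str, type]) -> Optional[Dict[str, Any]]:
    """Return the parsed reply if it matches the schema, otherwise None."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return None  # the model answered in free text
    if not isinstance(data, dict):
        return None
    for field, expected_type in schema.items():
        if not isinstance(data.get(field), expected_type):
            return None  # missing field or wrong type
    return data


good = '{"answer": "Quivr is a second brain.", "sources": ["readme.md"]}'
bad = "Sure! Here is the answer: Quivr is a second brain."
print(parse_structured_reply(good, ANSWER_SCHEMA) is not None)
print(parse_structured_reply(bad, ANSWER_SCHEMA) is not None)
```

When the check fails, the usual options are to retry the call or fall back to showing the raw text.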

Is Mistral's first model good, then?

Mistral's first model is impressive for its size!

In the beginning, I used to have another local model (GPT4All) available in Quivr, and the results were not the same.

I can't wait to see what Mistral is cooking for their bigger models; OpenAI needs to worry a bit 🔥

How can you run your own instance of Mistral easily?

Hugging Face x Mistral

Now, let's talk about the fun stuff. How did I manage to make it easily available to every user on Quivr? Through the power of Hugging Face Inference Endpoints, of course 🤗

Step 1 - Deploy the model

- Go to https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.1

- Click on Inference Endpoints

The 7B Instruct model is said to be better for chat. Source

Mistral Instruct v0.1 is the first family of instruct-finetuned models released by Mistral AI.

This model is based on the foundational Mistral 7B v0.1 model and has been fine-tuned for conversation and question answering.

Step 2 - Create the endpoint

- Put a custom name for your endpoint if you want

- Choose the location

- Don't change the recommended instance size

- Click on Create the endpoint

Step 3 - Get your credentials

- Wait for your instance to start

- Copy your API URL and Bearer Token
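With those two values in hand, a request to the endpoint can be sketched with nothing but the standard library. This assumes the endpoint speaks the usual Hugging Face text-generation JSON format (`inputs` plus `parameters` in, a list with `generated_text` out); check your endpoint's page if it differs. The helper names and the placeholder URL and token are hypothetical.

```python
import json
import urllib.request


def build_request(
    api_url: str, bearer_token: str, prompt: str, max_new_tokens: int = 256
) -> urllib.request.Request:
    """Build the authenticated POST request for a text-generation endpoint."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        api_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {bearer_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def generate(api_url: str, bearer_token: str, prompt: str) -> str:
    """Send the prompt and return the generated text (needs a live endpoint)."""
    request = build_request(api_url, bearer_token, prompt)
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    # Assumed response shape: [{"generated_text": "..."}]
    return body[0]["generated_text"]


# Example with placeholders; replace them with the values copied in Step 3:
# print(generate("<your API URL>", "<your Bearer Token>", "<s>[INST] Hello [/INST]"))
```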

You can now talk to Mistral through the Hugging Face endpoint. However, to get the most out of the Instruct model, your prompts need to follow its special instruction format.

Instruction format

In order to leverage instruction fine-tuning, your prompt should be surrounded by [INST] and [/INST] tokens. The very first instruction should begin with a beginning-of-sentence id. The next instructions should not. The assistant generation will be ended by the end-of-sentence token id.

E.g.

text = (
    "<s>[INST] What is your favourite condiment? [/INST]"
    "Well, I'm quite partial to a good squeeze of fresh lemon juice. It adds just the right amount of zesty flavour to whatever I'm cooking up in the kitchen!</s> "
    "[INST] Do you have mayonnaise recipes? [/INST]"
)

We implemented the feature in Quivr thanks to LiteLLM (https://github.com/BerriAI/litellm)
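If you are calling the endpoint without such a library, the instruction format described above can be assembled by hand. Here is a minimal sketch that reproduces the example prompt; `build_instruct_prompt` is a hypothetical helper name, and the turn layout (a list of user/assistant pairs) is my assumption.

```python
from typing import List, Optional, Tuple

BOS = "<s>"   # beginning-of-sentence token
EOS = "</s>"  # end-of-sentence token


def build_instruct_prompt(turns: List[Tuple[str, Optional[str]]]) -> str:
    """Format (user, assistant) turns; the last assistant reply may be None."""
    prompt = BOS  # only the very first instruction is preceded by BOS
    for user_message, assistant_reply in turns:
        prompt += f"[INST] {user_message} [/INST]"
        if assistant_reply is not None:
            # A completed assistant turn is closed by EOS.
            prompt += f"{assistant_reply}{EOS} "
    return prompt


prompt = build_instruct_prompt([
    (
        "What is your favourite condiment?",
        "Well, I'm quite partial to a good squeeze of fresh lemon juice. "
        "It adds just the right amount of zesty flavour to whatever I'm "
        "cooking up in the kitchen!",
    ),
    ("Do you have mayonnaise recipes?", None),
])
print(prompt)
```

Leaving the last reply as `None` keeps the prompt open, so the model writes the next assistant turn.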

Step 4 - Install Quivr

Follow Quivr's procedure in 3 steps from the readme.md

Installation Steps 💽

- Step 0: If needed, the installation is explained on YouTube here

Step 1: Clone the repository using one of these commands:

- If you don't have an SSH key set up:

git clone https://github.com/StanGirard/Quivr.git && cd Quivr

- If you have an SSH key set up or want to add it (guide here)

git clone git@github.com:StanGirard/Quivr.git && cd Quivr

Step 2: Use the install helper script to automate subsequent steps. You can use the install_helper.sh script to set up your env files and execute the migrations.

brew install gum # Windows (via Scoop): scoop install charm-gum
brew install postgresql # Windows (via Scoop): scoop install PostgreSQL

chmod +x install_helper.sh
./install_helper.sh

Step 3: In your backend/.env add the following environment variables

- HUGGINGFACE_API_KEY

- HUGGINGFACE_API_BASE
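To show what these two variables are for, here is a hedged sketch of a LiteLLM call against a Hugging Face endpoint. It relies on LiteLLM's OpenAI-style `completion` function with the `api_base` and `api_key` arguments; the `litellm_kwargs` and `ask` helpers are hypothetical, and this is an illustration rather than Quivr's actual code.

```python
import os
from typing import Any, Dict

MODEL = "huggingface/mistralai/Mistral-7B-Instruct-v0.1"


def litellm_kwargs(question: str, system_prompt: str) -> Dict[str, Any]:
    """Collect the arguments for a LiteLLM completion call."""
    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
        ],
        # Values copied from the endpoint page in the earlier step.
        "api_base": os.environ.get("HUGGINGFACE_API_BASE"),
        "api_key": os.environ.get("HUGGINGFACE_API_KEY"),
    }


def ask(question: str, system_prompt: str) -> str:
    """Call the endpoint (needs `pip install litellm` and a live endpoint)."""
    from litellm import completion  # third-party import, kept local on purpose

    response = completion(**litellm_kwargs(question, system_prompt))
    return response["choices"][0]["message"]["content"]


# Example, once both environment variables are set:
# print(ask("What is Quivr?", "Your name is Quivr. You're a helpful assistant."))
```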

Step 4: Go to your Supabase instance and, in the user_settings table, add the following model for your user: "huggingface/mistralai/Mistral-7B-Instruct-v0.1"

Et voilà! You now have a locally running instance of Quivr with Mistral hosted on Hugging Face.

If you want to try it without any hassle, you can head over to Quivr.app and use Mistral for free, thanks to Hugging Face.

I hope this article was helpful. We spent a lot of time implementing this feature; it would not have been possible without Hugging Face, LiteLLM, Mistral, and Theodo. I hope you enjoy it!

Let me know which model you want to have on Quivr.app, and we'll make it available! Happy coding!

Source code can be found here: